Executive summary: How to get the Caps-Lock key to act as the mod-key in Xmonad when Xmonad is running in a VirtualBox VM on an OS X host. Or, if the previous sentence is gibberish to you, how to remap keyboard keys on Macs and in Linux.

I did this once on my MacBook, and then it stopped working when I upgraded the OS, by which point I had completely forgotten what I had done. So now I’m redoing it and documenting it here for posterity.

I’ll start with the short turbo-version for the impatient; a more detailed explanation follows after it.

The short turbo-version for the impatient

- On the Mac, go into System Preferences -> Keyboard -> Modifier Keys and set Caps-Lock to “No Action”.

- Get the OS X utility “Seil”. Use it to change the Caps-Lock key so it generates the PC_APPLICATION KeyCode (which is value 110).

- Go into Linux on your VirtualBox. In my case the PC_APPLICATION KeyCode was translated into KeyCode 135 in X. Your Linux might change it to something else. You can run the “xev” program in a terminal in Linux and then press Caps-Lock to see which value it generates. Look for “keycode” in the output when you press Caps-Lock. If it generates something different from 135 then replace 135 with your keycode in the steps below.

- Take the keycode you figured out in the previous step and check which keysym it generates by running

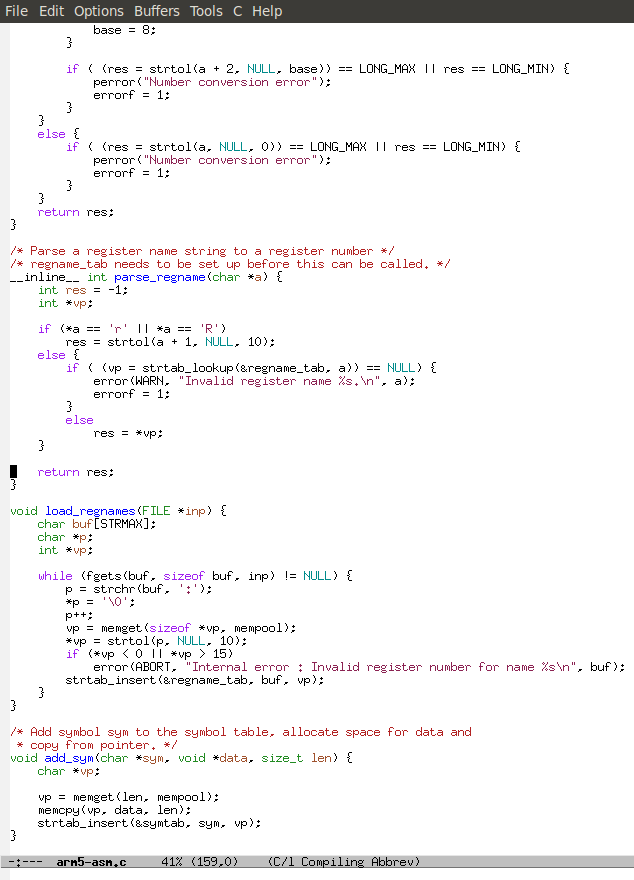

xmodmap -pke | grep 135

In my case this printed

keycode 135 = Menu NoSymbol Menu

so the keycode maps to the keysym “Menu”.

- Add the keysym from the previous step to modifier mod3 by running the following in a Linux terminal:

xmodmap -e "add mod3 = Menu"

- Turn off key repeat for keycode 135 by running

xset -r 135

- Test that everything works by running “xev” in a Linux terminal, mousing over the created window and pressing the Caps-Lock key. You should see something like “KeyPress event … keycode 135 (keysym 0xff67, Menu)” when pressing the key, no repeats while the key is being held down, and a corresponding “KeyRelease event…” when the key is released.

- Get xmonad to use the mod3 modifier as the “mod” key by adding the following: “modMask = mod3Mask,” to your config in .xmonad/xmonad.hs
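One caveat about the keysym lookup step above: a plain “grep 135” matches any line that happens to contain 135, for instance keycode 235. Here is a minimal sketch of a stricter lookup. The function name keysym_for_keycode is my own invention, not something xmodmap provides, and it only assumes the “keycode N = ...” line format shown above.

```shell
# keysym_for_keycode is a hypothetical helper, not part of xmodmap.
# It reads `xmodmap -pke` output on stdin and prints the first keysym
# bound to exactly the given keycode. It prints nothing if the keycode
# is missing or has no keysym assigned.
keysym_for_keycode() {
    awk -v code="$1" '
        $1 == "keycode" && $2 == code && $3 == "=" && NF >= 4 {
            print $4
            exit
        }'
}
```

With X running you would use it as “xmodmap -pke | keysym_for_keycode 135”.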

If you wanted to do exactly this, and it worked, and you don’t have an unhealthy compulsion to understand the workings of the world, you can stop reading here.

What the hell did you just do?

Key remapping gets confusing because of the indirection and terminology involved.

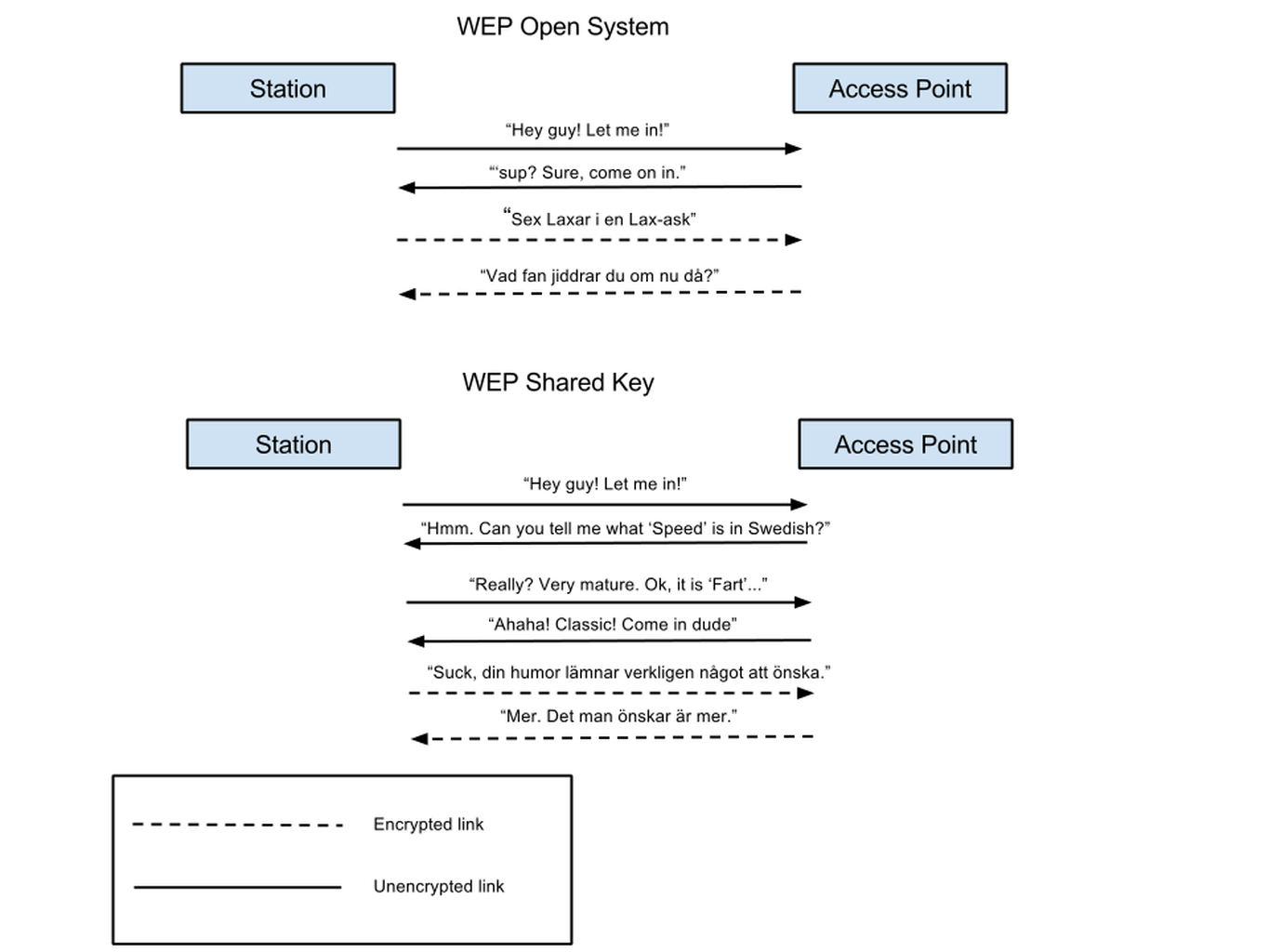

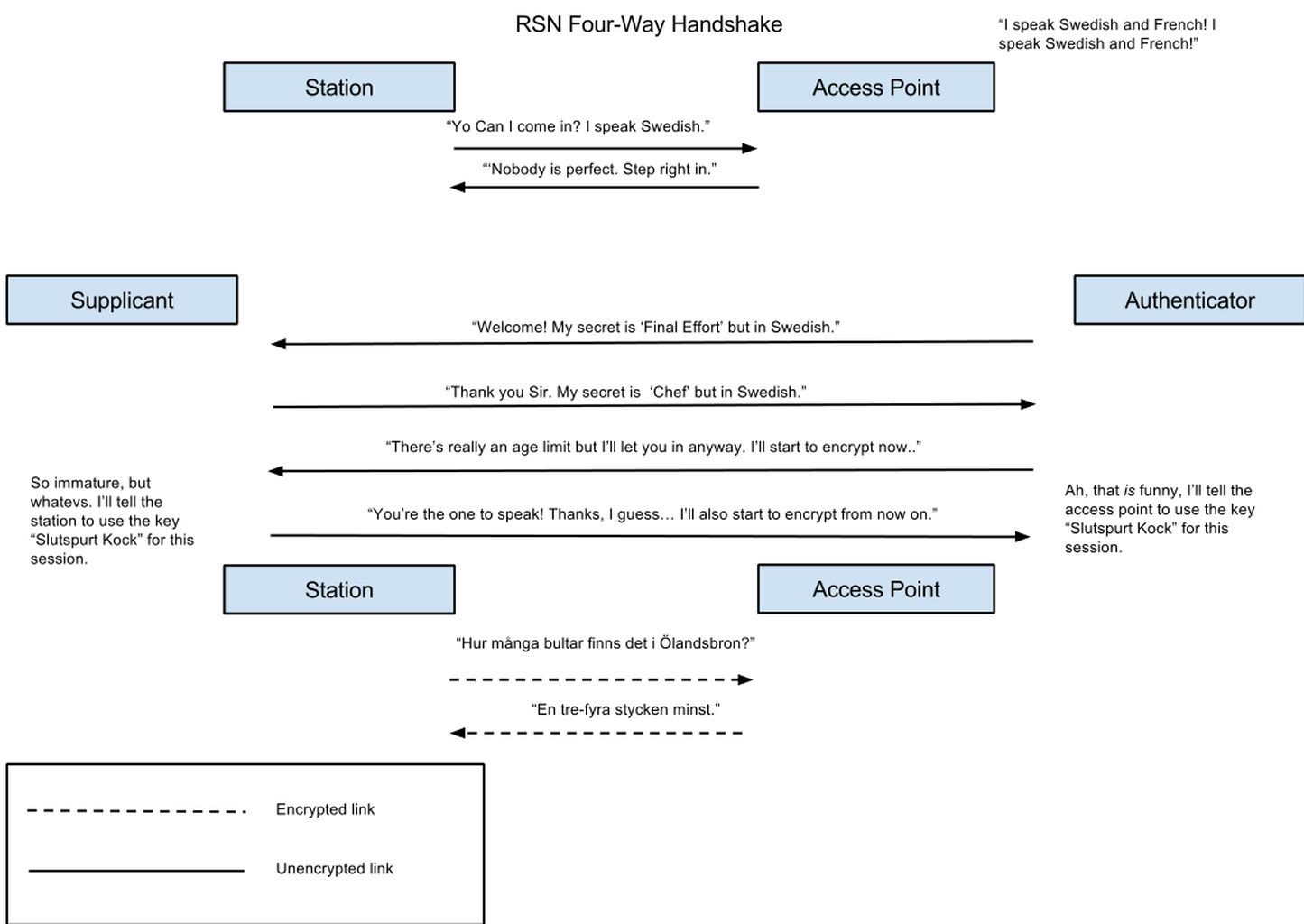

When you press a key, the keyboard generates a scan code. The scan code is one or more bytes that identify the physical key on the keyboard. The scan code also says if the key was pressed (called “make” for some obscure reason) or released (called “break”, probably for the same obscure reason). So far things are fairly simple. Different types of keyboards have different sets of scan codes. For example, a USB keyboard generates a different set of scan codes than a PS/2 keyboard.

The scan code is converted by the operating system into a keycode. The keycode is one or more bytes, like the scan code. The difference between the scan code and the keycode is that the meaning of the scan code depends on the type of keyboard but the meaning of the keycode is the same for all keyboards.

A keycode also has a mapping to a key symbol or keysym. The keysym is a name that describes what the key is supposed to do. The keysym is used by applications to identify particular key presses, or by confused programmers who want to use Caps-Lock for something other than what God/IBM intended.

For example, if I press the ‘A’ key on my MacBook keyboard it will generate the scan code 0x04. I’m actually just guessing because 0x04 is the USB keyboard scan code for ‘A’ and I don’t know for sure that the internal keyboard in the MacBook conforms to the USB keyboard standard, but let’s assume that it does.

The scan code 0x04 is translated by the operating system into the keycode 0x00 which is translated into the keysym kVK_ANSI_A.

If I somehow managed to attach an old PS/2-keyboard to the MacBook and pressed the ‘A’ key on that, it would generate the scan code 0x1C which would be translated into the keycode 0x00 which would still be translated into kVK_ANSI_A.

Suppose that I’m writing an application and I want the ‘A’ key to activate some sort of spinning ball-type animation. My application would register an event handler for the kVK_ANSI_A key down event. This would then work correctly no matter what keyboard was used (as long as it actually had an ‘A’ key).
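To make the two levels of indirection concrete, here is a toy sketch of the lookup chain. The function names are made up for illustration, and the tables contain only the values from the ‘A’ key example above (including my guess about the USB scan code); a real system has complete tables inside the keyboard driver and the OS.

```shell
# Hypothetical two-stage lookup. The tables hold only the values from
# the 'A' key example in the text, not a complete keyboard map.

# Stage 1: (keyboard type, scan code) -> keycode.
# This stage depends on the type of keyboard.
scancode_to_keycode() {
    case "$1:$2" in
        usb:0x04) echo 0x00 ;;   # 'A' on a USB keyboard
        ps2:0x1C) echo 0x00 ;;   # 'A' on a PS/2 keyboard
        *) return 1 ;;           # not in this toy table
    esac
}

# Stage 2: keycode -> keysym. This stage is the same for all keyboards.
keycode_to_keysym() {
    case "$1" in
        0x00) echo kVK_ANSI_A ;;
        *) return 1 ;;
    esac
}

# Chain the two stages: keyboard type and scan code in, keysym out.
keysym_for_key() {
    code=$(scancode_to_keycode "$1" "$2") || return 1
    keycode_to_keysym "$code"
}
```

Both “keysym_for_key usb 0x04” and “keysym_for_key ps2 0x1C” end up at the same keysym, which is the whole point: the application only ever has to know about the last stage.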

In addition to the normal keys some keys act like modifiers: holding them down changes the meaning of other keys. These keys are called modifiers (I know!). Typical modifier keys are the Shift, Control and Alt keys. Modifier keys can be regular modifiers that need to be held down at the same time as the key being modified (like Shift normally behaves). Modifiers can also be Lock modifiers that toggle their function on and off (like Caps-Lock). There is a third variant called Sticky which behaves like a Lock modifier that is automatically unlocked after the next key-press, so that the modifier is only applied to the next key. Sticky modifiers save some key presses if the modifier is frequently applied to only a single key (like Shift) and rarely applied to several keys in a row (unlike rage-Shift).

So far, so general. Much of the tricky stuff happens in X so let’s look at how X handles key mapping.

X has a database with different key mappings. Each map converts keycodes to keysyms. These maps are useful because they allow you to remap the keyboard to different language layouts. My MacBook has a US keyboard. If I press the semicolon key it will generate keycode 47 and with a US keyboard map this will generate a semicolon character which is great for programming. On a Swedish keyboard the key in that same position instead generates an ‘Ö’, otherwise known as a “heavy metal O” (not really). If I’m not programming but, perhaps, working on the script for a Swedish erotic movie, then I would want the semicolon key to generate the ‘Ö’ character because a semicolon is a lot less useful in porn than you might think, while ‘Ö’ is widely considered the most erotic vowel (again: not really. ‘Ö’ is the sound you make when you say “Duh!” but without the ‘D’). With the Swedish keyboard map, keycode 47 generates an ‘Ö’ character instead of the semicolon.

So you can load different keyboard maps depending on what you want to do, effectively switching between different keyboard layouts or languages. You can load an entire map using the “setxkbmap” command, or you can modify the current keymap with the “xmodmap” command.

By default the “xmodmap” command lists the available modifiers and the keysyms assigned to them. For example:

shift       Shift_L (0x32), Shift_R (0x3e)
lock        Caps_Lock (0x42)
control     Control_L (0x25), Control_R (0x69)
mod1        Alt_L (0x40), Alt_R (0x6c), Meta_L (0xcd)
mod2        Num_Lock (0x4d)
mod3
mod4        Super_L (0x85), Super_R (0x86), Super_L (0xce), Hyper_L (0xcf)
mod5        ISO_Level3_Shift (0x5c), Mode_switch (0xcb)

Here we see the eight available modifiers. The assigned keysyms are listed together with their matching keycodes in parentheses. In this case you can see that a single keysym (like Super_L) can be mapped to several keycodes (0x85, 0xce).
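Before moving a keysym to another modifier it is handy to know which modifier it currently sits on. The following is a rough sketch that scans the listing format shown above; modifiers_for_keysym is a name I made up, and the parsing assumes the listing looks like the sample (modifier name first, then keysyms with their keycodes in parentheses).

```shell
# modifiers_for_keysym is a hypothetical helper, not part of xmodmap.
# It reads the output of plain `xmodmap` on stdin and prints the name of
# every modifier that has the given keysym assigned, one per line.
modifiers_for_keysym() {
    awk -v sym="$1" '
        # Only look at the eight modifier rows, not the header line.
        $1 ~ /^(shift|lock|control|mod[1-5])$/ {
            for (i = 2; i <= NF; i++)
                if ($i == sym) { print $1; break }
        }'
}
```

Used as “xmodmap | modifiers_for_keysym Super_L”. If it prints nothing, the keysym is not assigned to any modifier.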

“xmodmap -pke” lists the keycode-keysym mapping:

keycode 8 =
keycode 9 = Escape NoSymbol Escape
keycode 10 = 1 exclam 1 exclam
…

So let’s walk through what we want to do.

The Xmonad Mod key is fortunately named because it works like a modifier key: it should only do something when combined with another key. The default key used as Mod in Xmonad is the left Alt key. Unfortunately the Alt key is perhaps the most important key in the whole universe if you run EMACS, which I do. So I really need Xmonad to use some other key for Mod. On normal keyboards you can usually find a couple of rarely used keys (Scroll-Lock and Print-Screen come to mind), but the MacBook keyboard lacks these. The only really useless key (for me) is Caps-Lock. Caps-Lock is also a good choice because it is well placed on the keyboard.

Caps-Lock is a bad choice because it is weird. Let’s say that we want to use the left Control key for Mod in Xmonad. This would be easy to do: just get Xmonad to use the “mod3” modifier as the Mod key (see above) and then remap the Control_L keysym to the mod3 modifier using xmodmap:

- Clear all existing keysyms that might generate mod3:

xmodmap -e "clear mod3"

- Remove the Control_L keysym from any existing modifier (or it will do multiple things which might be confusing):

xmodmap -e "remove control = Control_L"

- Assign the Control_L keysym to the mod3 modifier:

xmodmap -e "add mod3 = Control_L"

This process does not work with Caps_Lock. If you run the “xev” program in an X terminal it will show you information about the mouse and keyboard events that are sent when you press keys (and move the mouse). What this program shows is that when the Caps-Lock key is pressed the X server will first send a Key Press event to say that the Caps-Lock key was pressed, immediately followed by a Key Release event to say that the key was released. The release event is sent right away, even if the key is being held down. This makes the Caps-Lock key not work as the Xmonad modifier since the modifier key needs to be held down to be combined with other keys.
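The raw xev output is rather noisy, so here is a small sketch of a filter that boils it down to one line per key event. The name summarize_key_events is made up, and the parsing assumes the usual xev layout where a “KeyPress event” or “KeyRelease event” line is followed a couple of lines later by a line containing “keycode N”.

```shell
# summarize_key_events is a hypothetical helper, not part of xev.
# It reads xev output on stdin and prints "press N" or "release N"
# for every key event, where N is the keycode.
summarize_key_events() {
    awk '
        /^KeyPress event/   { kind = "press" }
        /^KeyRelease event/ { kind = "release" }
        # The keycode shows up on a later line of the same event block.
        kind != "" && match($0, /keycode [0-9]+/) {
            # Skip the 8 characters of "keycode " to get the number.
            print kind, substr($0, RSTART + 8, RLENGTH - 8)
            kind = ""
        }'
}
```

Run it as “xev | summarize_key_events”. A key that behaves like a proper modifier shows a single “press” when you push it down and a single “release” when you let go; the Caps-Lock behaviour described above shows both right away. Depending on how the output is buffered through the pipe, the lines may arrive with some delay.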

I don’t know for sure who makes the Caps-Lock key behave in this way, OS X, VirtualBox or Linux/X, but I suspect that it is OS X. If I change the keysym being generated by the keycode (66) of the physical Caps-Lock key to another keysym then that other keysym behaves in the same way.

Fortunately there is a solution: get OS X to generate another keycode when the physical Caps-Lock key is pressed. Note the difference between keysym and keycode here. It is relatively straightforward to map the keycode that Caps-Lock generates to a keysym different from Caps_Lock, but as we saw in the previous paragraph that did not fix the key press/release problem. If we can manage to convince the OS to generate a different keycode when the Caps-Lock scan code arrives from the keyboard then no one will think that it still has the “Caps-Lock magic”. It is easy to get the Caps-Lock key to generate the keycodes for Control, Command and Option; just open the keyboard preferences and click on the “Modifiers” button on the first tab. This works fine unless you happen to want to use all of those buttons for other things (I use them as Control, Alt and “Escape from the VM”).

If you want to remap Caps-Lock to a keycode that is not Control, Command or Option you’ll need a program called “Seil”. You then need to go into the Modifiers section of the keyboard preferences as just mentioned and change the Caps-Lock key to “No Action” and then you can use Seil to get the Caps-Lock key to generate a different keycode.

The trick now is to find a keycode that is unused both by OS X and by X. I picked the PC_APPLICATION keycode (110) because the keyboard does not have such a key. Open Seil and go to Settings -> “Change the caps lock key” and check the “Change the caps lock key” box. Then change the “keycode” column to say “110”.

You can now go into your Linux virtual machine and run the “xev” program and verify that the Caps-Lock key generates the correct keycode. You will probably discover two things.

First, the Caps-Lock key does, in fact, not generate keycode 110. In my case it generates keycode 135 in X. This is not actually all that unexpected. Remember that X maps scan codes to keycodes and that the VM translates the keycode 110 that OS X sends it into some simulated scan code for whatever keyboard the VM emulates. X then takes that emulated scan code and translates it into a keycode that X feels comfortable with. With the keyboard layout that I use in X the PC_APPLICATION key is mapped to the keysym “Menu”, which seems reasonable.

The second thing your “xev” test may uncover is that keeping the key pressed will generate a stream of key press/release events. The keycode 135 has key repeat enabled. This is easily fixed: just run the command

xset -r 135

to disable key repeat for keycode 135.

Now we’re getting close. When we press the Caps-Lock key on the Mac the X server in the Linux VM generates a 135 keycode which by default is the “Menu” keysym. Now all we have to do is to assign the “Menu” keysym to the “mod3” modifier, which brings us back to our old friend xmodmap.

xmodmap -e "clear mod3"
xmodmap -e "add mod3 = Menu"

And Bob’s your uncle! Or not, as the case may be. But mission accomplished!

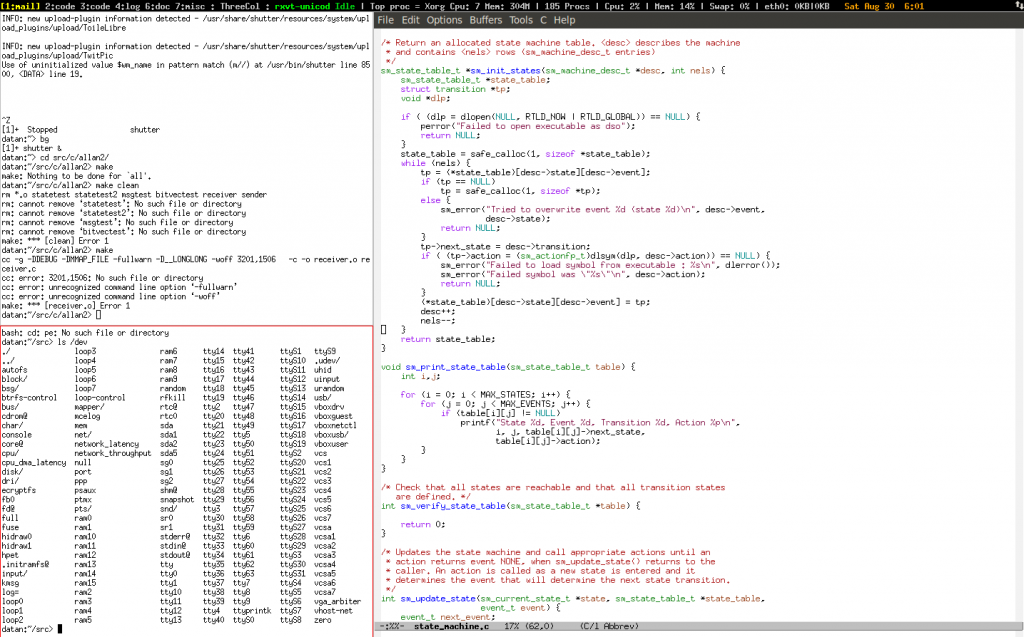

The changes to the X key mapping will be lost on reboot and every time the keymap is reloaded, such as when switching language layouts. For this reason I’ve put the X configuration in a script that I execute after switching layouts. I have a file called .xmodmap with the following contents:

clear mod3
add mod3 = Menu

I also have an executable bash script, bin/xmodmap.sh, with the contents

#!/bin/bash
xmodmap ~/.xmodmap
xset -r 135

I run bin/xmodmap.sh from my .Xsession and again every time I switch keyboard layouts.

An additional useful thing is to add the line

keycode 134 = Pointer_Button2

at the end of the .xmodmap file. This will make the right Command button emulate the middle mouse button, which is nice if you use it for pasting things.

Finally, here are two illuminating links if you feel like digging deeper:

https://www.berrange.com/tags/scan-codes/

http://www.win.tue.nl/~aeb/linux/kbd/scancodes.html

Update

The Menu keysym can cause extra characters to appear, so I had to swap it for Hyper_L instead. My input file to xmodmap became this:

keycode 207 =
keycode 135 = Hyper_L
clear mod4
clear mod3
add mod3 = Hyper_L
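One thing that tripped me up when reading this back: plain “xmodmap” prints keycodes in hex, while “xmodmap -pke”, “xev” and the input file use decimal. The 207 above is the same keycode that the modifier listing earlier showed as Hyper_L (0xcf). A tiny conversion helper, with hex_to_dec being a name of my own choosing:

```shell
# hex_to_dec is a hypothetical helper: it converts a hex keycode as
# printed by plain `xmodmap` (e.g. 0xcf) to the decimal form used by
# `xmodmap -pke`, xev and xmodmap input files.
hex_to_dec() {
    printf '%d\n' "$1"
}

hex_to_dec 0xcf   # prints 207, Hyper_L in the modifier listing
hex_to_dec 0x42   # prints 66, Caps_Lock
```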